Enterprises are deploying generative AI at scale under security assumptions that were designed for slower systems, longer response cycles, and encryption models expected to hold for decades. The margin those assumptions depend on is shrinking fast. According to security leaders working at the intersection of AI and quantum computing, the issue is no longer whether quantum capabilities will matter, but how quickly they invalidate assumptions already embedded in enterprise AI deployments.

In an upcoming AI-360 webinar, Ryan Cloutier, a quantum security and AI security readiness specialist at ArcQubit, expanded on a point he has made before: “AI compresses the attacker loop. Quantum compresses the defender timeline.” The combination, he argued, creates a structural shift in security dynamics that enterprises are not yet fully accounting for.

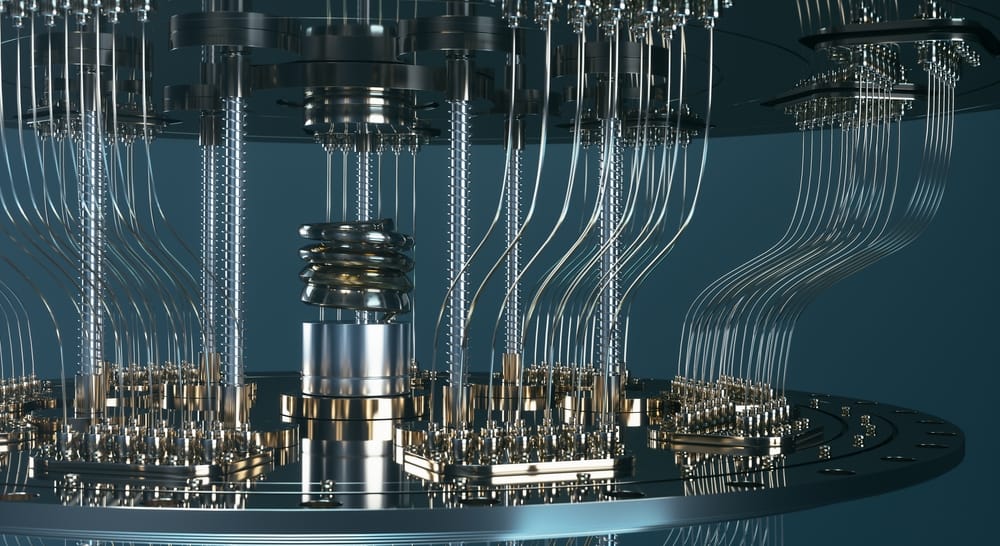

GenAI systems are already embedded across customer service, internal knowledge management, software development, analytics, and decision support. At the same time, quantum computing has moved from long-term theory toward nearer-term operational reality. Cloutier pointed to recent demonstrations of room-temperature quantum operation as evidence that timelines once measured in decades are now measured in years, or less. “We’re not talking about something that’s ten years away,” he said. “This has realistic availability to attackers.”

The operational consequence is speed. Traditional security models assume some breathing room between discovery and exploitation: a zero-day vulnerability, a patch window, a remediation cycle. Quantum changes that math. “With quantum, that window goes from days or weeks to seconds, potentially nanoseconds,” Cloutier said. In that environment, defenders cannot rely on reactive controls or periodic updates. Systems must be built to operate securely by default, under constant pressure.

This compression effect matters because GenAI dramatically expands the amount of valuable data moving through enterprise systems. Prompts, conversation histories, agent-to-agent messages, uploaded documents, and intermediate reasoning steps all represent new forms of sensitive data. Much of it is created in real time, often outside traditional data classification or retention frameworks.

Hervé Le Jouan, co-founder and CEO of Filtar.ai, said regulated industries are increasingly aware of the exposure this creates. “This is no longer theoretical for big companies,” he said, referencing banks and telecoms on both sides of the Atlantic. “They understand that it may happen tomorrow.”

For years, enterprises have relied on a simple premise: encrypted data, even if stolen, does not constitute a breach. That assumption underpins breach disclosure rules, liability calculations, and risk acceptance decisions. Quantum computing disrupts that logic through what security practitioners call “harvest now, decrypt later.” Attackers can collect encrypted data today and wait until quantum capabilities are sufficient to decrypt it.

“What happens when that data gets decrypted?” Cloutier asked. “You’ll be held to today’s standards, not the standards from a decade ago.” He warned of what he described as “live, post-breach recoil,” where data exfiltrated years earlier suddenly becomes legally and operationally actionable.

GenAI amplifies this risk because it increases both the volume and sensitivity of data being generated. Le Jouan noted that enterprises rushed into GenAI adoption without fully accounting for downstream consequences. “No one really thought through the consequence of deliberately putting information into prompts, or having agents access data,” he said. In banking and healthcare, where data retention horizons span decades, that oversight is becoming harder to justify.
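One way to reason about these retention horizons is a widely cited rule of thumb often called Mosca's inequality, which is not something the speakers cited: if x is how long data must stay confidential, y is how long it takes to migrate that data onto post-quantum cryptography, and z is the time until a cryptographically relevant quantum computer exists, then harvested ciphertext is at risk whenever x + y > z. The sketch below applies that check per data class. The class names, field names, and every number in it are hypothetical, and z in particular is an assumption rather than a known value.

```python
from dataclasses import dataclass


@dataclass
class DataAsset:
    """A class of data with its own confidentiality requirement (illustrative only)."""
    name: str
    shelf_life_years: float  # x: how long the data must stay confidential
    migration_years: float   # y: time needed to move it onto post-quantum crypto


def exposed(asset: DataAsset, years_to_quantum: float) -> bool:
    """Mosca's inequality: harvested data is at risk when x + y > z.

    years_to_quantum (z) is an estimate of when a cryptographically relevant
    quantum computer becomes available; it is an assumption, not a known value.
    """
    return asset.shelf_life_years + asset.migration_years > years_to_quantum


def exposure_gap(asset: DataAsset, years_to_quantum: float) -> float:
    """Roughly how many years harvested data could be decryptable while still sensitive."""
    return max(0.0, asset.shelf_life_years + asset.migration_years - years_to_quantum)


if __name__ == "__main__":
    # Hypothetical figures for illustration only.
    z = 10.0
    assets = [
        DataAsset("ephemeral session data", 0.1, 2.0),
        DataAsset("mortgage records", 30.0, 3.0),
        DataAsset("patient histories", 50.0, 5.0),
    ]
    for a in assets:
        print(f"{a.name}: exposed={exposed(a, z)}, gap={exposure_gap(a, z):.1f} years")
```

The point of the check is that long-lived records in banking and healthcare can fail it even under optimistic estimates of z, because x alone can exceed any plausible quantum timeline.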

The challenge is compounded by shadow AI. When organizations restrict or delay official GenAI deployments, employees often turn to personal accounts and unmanaged tools. “The employee is using a personal device and doing things on behalf of the company,” Le Jouan said. “The company has no visibility.” Even when enterprises provide licensed tools, misconfiguration and weak guardrails can result in data flowing outside intended boundaries.

This creates a paradox. Blocking AI use reduces visibility and control. Allowing unrestricted use increases exposure. Neither approach aligns with quantum-era risk models, where any leaked data may remain exploitable indefinitely.

The conversation also surfaced a broader architectural issue: non-deterministic systems are increasingly being embedded into environments that demand predictability. AI is now influencing credit decisions, medical diagnostics, infrastructure management, and automated customer interactions. “When AI starts getting embedded into systems that impact health, life, and safety, the security calculation changes massively,” Cloutier said.

He argued that enterprises need to borrow from safety engineering disciplines long used in industrial systems. “Where is the pressure release valve in a data processing system?” he asked. In mechanical systems, failure modes are designed and tested. In AI systems, especially agentic ones, failure behavior is often opaque.

Agent-to-agent architectures introduce additional complexity. As AI systems begin coordinating tasks autonomously, permissioning, trust, and monitoring become harder to enforce. “You can have a trusted agent handing poisoned output into production without realizing it,” Cloutier said, describing coordinated agent attacks that evade traditional content filters.

Le Jouan emphasized that governance must extend beyond individual prompts or outputs, and boiled it down to three elements: context, rights, and explainability. Enterprises will need to understand who initiated an action, what data was accessed, what policies applied, and how decisions propagated across systems. This is not only a security requirement but an audit and compliance necessity.
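The four questions above map naturally onto an audit record attached to every step a user or agent takes, with a parent link so a decision can be traced back through an agent chain. The following is a minimal sketch under that assumption; `AuditRecord`, `AuditLog`, and all field names are hypothetical and do not describe Filtar.ai's product or any particular logging system.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AuditRecord:
    """One step taken by a user or an AI agent (all field names are hypothetical)."""
    action_id: str
    initiator: str                      # who started this step (user or agent id)
    data_accessed: tuple[str, ...]      # datasets or documents touched
    policies_applied: tuple[str, ...]   # policy ids evaluated for this step
    parent_id: Optional[str] = None     # the step that triggered this one, if any


class AuditLog:
    """Append-only store; a record's parent must already be logged, so no cycles form."""

    def __init__(self) -> None:
        self._records: dict[str, AuditRecord] = {}

    def record(self, rec: AuditRecord) -> None:
        if rec.action_id in self._records:
            raise ValueError(f"duplicate action id: {rec.action_id}")
        if rec.parent_id is not None and rec.parent_id not in self._records:
            raise ValueError(f"unknown parent action: {rec.parent_id}")
        self._records[rec.action_id] = rec

    def lineage(self, action_id: str) -> list[AuditRecord]:
        """Return the chain of steps from the original initiator to this action."""
        chain: list[AuditRecord] = []
        current: Optional[str] = action_id
        while current is not None:
            rec = self._records[current]
            chain.append(rec)
            current = rec.parent_id
        return list(reversed(chain))


if __name__ == "__main__":
    log = AuditLog()
    log.record(AuditRecord("a1", "user:alice", ("crm/accounts",), ("pii-masking",)))
    log.record(AuditRecord("a2", "agent:summarizer", ("crm/accounts",),
                           ("pii-masking", "no-export"), parent_id="a1"))
    log.record(AuditRecord("a3", "agent:email-writer", (), ("outbound-review",),
                           parent_id="a2"))
    for step in log.lineage("a3"):
        print(step.initiator, step.data_accessed, step.policies_applied)
```

Requiring the parent to exist before a child is logged, and rejecting duplicate ids, keeps the lineage walk finite; a real deployment would also need tamper-evident storage, which this sketch does not attempt.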

Hallucinations further blur the line between quality and security. Confidently wrong outputs can trigger regulatory, legal, and financial consequences. Cloutier went further, arguing that misinformation reaching someone who acts on it professionally, such as an attorney or a doctor, is in fact a security issue. He warned that such errors can cascade, shaping decisions long after the original output is corrected.

Underlying many of these risks is data integrity. “AI does nothing except work on the data it’s trained on to get the prediction out,” Le Jouan said. Poor labeling, weak access controls, or poisoned datasets undermine reliability and trust. In that sense, hallucinations, bias, and compliance failures often trace back to failures in data governance rather than model design.

The strategic implication for enterprise leaders is not panic, but reprioritization. Quantum readiness, post-quantum cryptography, AI governance, and data lifecycle management can no longer be treated as parallel initiatives. They are converging into a single risk surface.

Enterprises that continue to deploy GenAI assuming long encryption lifetimes, delayed breach consequences, and human-paced attack cycles may find those assumptions invalidated quietly and retroactively. The risk is not that quantum arrives suddenly. It is that organizations are already behaving as if it never will.